Main publication

- Camilo Fosco, Anelise Newman, Vincent Casser, Allen Lee, Barry McNamara and Aude Oliva: “Multimodal Memorability: Modeling Effects of Semantics and Decay on Video Memorability.” European Conference on Computer Vision (ECCV’20). (full text)

Related publications

- Vincent Casser*, Camilo Fosco*, Anelise Newman*, Barry McNamara and Aude Oliva: “To Decay or not to Decay: Modeling Video Memorability Over Time.” Shared Visual Representations in Human and Machine Intelligence, NeurIPS’19.

Visit our Project Website

Model Demo

Abstract

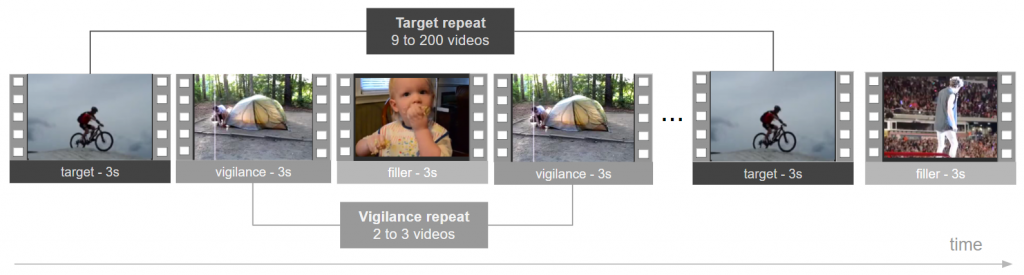

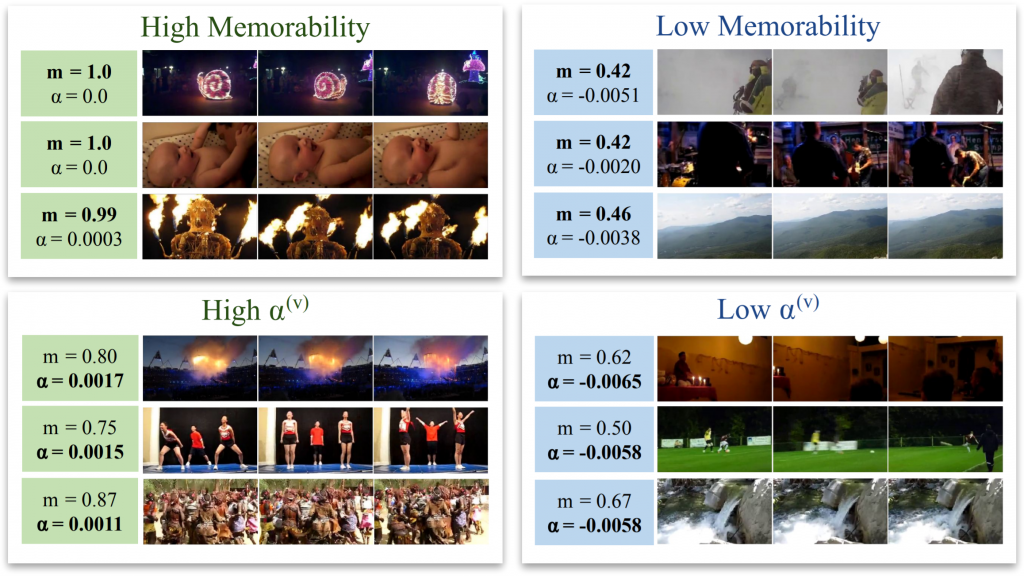

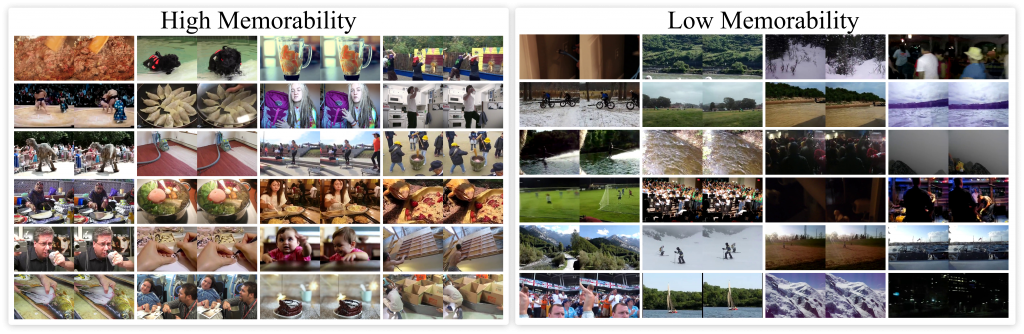

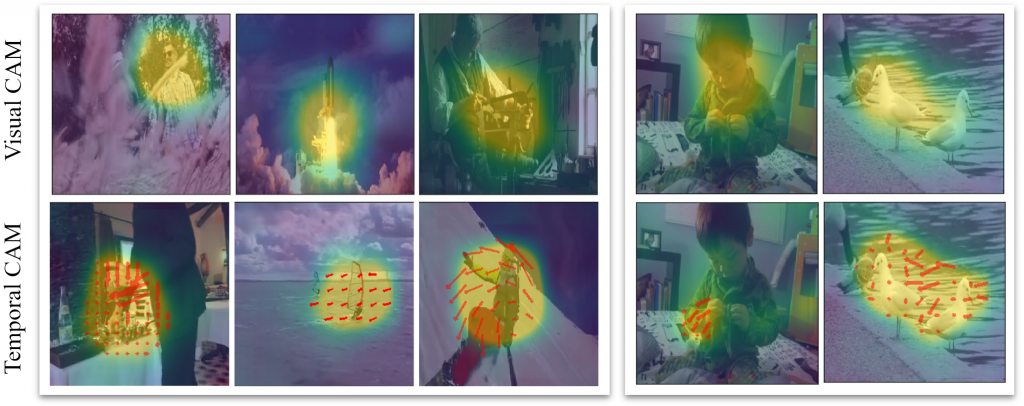

A key capability of an intelligent system is deciding when events from past experience must be remembered and when they can be forgotten. Towards this goal, we develop a predictive model of human visual event memory and how those memories decay over time. We introduce Memento10k, a new, dynamic video memorability dataset containing human annotations at different viewing delays. Based on our findings, we propose a new mathematical formulation of memorability decay, resulting in a model that produces the first quantitative estimation of how a video decays in memory over time. In contrast with previous work, our model can predict the probability that a video will be remembered at an arbitrary delay. Importantly, our approach is multimodal, combining visual and semantic information to fully represent the meaning of events. Our experiments on two video memorability benchmarks, including Memento10k, show that our model significantly improves upon the best prior approach (by 15% on average).
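To make the idea of predicting memorability "at an arbitrary delay" concrete, here is a minimal sketch. The abstract does not state the functional form of the decay, so this example assumes a simple linear decay around a reference delay, purely for illustration. The class `DecayModel`, its fields `base_score`, `decay_rate`, and `reference_delay`, and all numeric values are hypothetical and are not taken from the paper or its code.

```python
from dataclasses import dataclass


@dataclass
class DecayModel:
    """Illustrative per-video decay model (assumed linear form, not the paper's code)."""

    base_score: float              # hypothetical: recall probability at the reference delay
    decay_rate: float              # hypothetical: change in recall probability per unit of delay
    reference_delay: float = 80.0  # hypothetical reference delay, in arbitrary units

    def predict(self, delay: float) -> float:
        """Return the estimated recall probability at `delay`, clamped to [0, 1]."""
        raw = self.base_score + self.decay_rate * (delay - self.reference_delay)
        return min(1.0, max(0.0, raw))


if __name__ == "__main__":
    # Made-up values for a single video; a real model would predict both numbers per video.
    video = DecayModel(base_score=0.9, decay_rate=-0.001)
    for delay in (80.0, 180.0, 500.0):
        print(delay, video.predict(delay))
```

Under this assumption, a video is summarized by two numbers (a score at the reference delay and a decay rate), so the probability of recall at any other delay follows from a single evaluation. The clamp keeps the output a valid probability when the linear extrapolation leaves the [0, 1] range.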

Supplementary Material