Main publications

- Guohao Li and Matthias Mueller, Vincent Casser, Neil Smith, Dominik Michels, Bernard Ghanem: “OIL: Observational Imitation Learning.” Robotics: Science and Systems (RSS’19). (full text)

Related publications

- Vincent Casser and Matthias Mueller, Neil Smith, Dominik Michels, Bernard Ghanem: “Teaching UAVs to Race: End-to-End Regression of Agile Controls in Simulation.” 2nd International Workshop on Computer Vision for UAVs, ECCV’18. Best paper award. (full text)

- Matthias Mueller, Guohao Li, Vincent Casser, Neil Smith, Dominik Michels, Bernard Ghanem: “Learning a Controller Fusion Network by Online Trajectory Filtering for Vision-based UAV Racing.” 3rd International Workshop on Computer Vision for UAVs, CVPR’19. (full text)

Visit our Project Website

Abstract

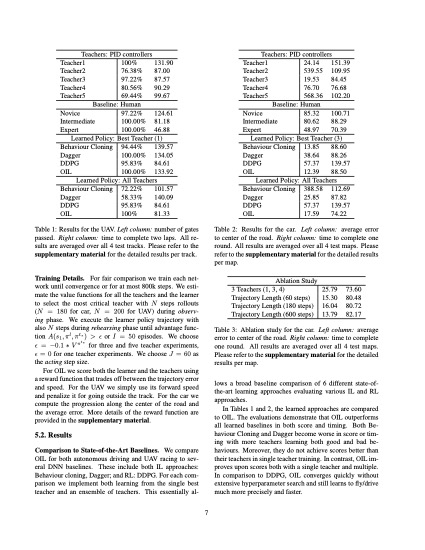

Recent work has explored the problem of autonomous navigation by imitating a teacher and learning an end-to-end policy, which directly predicts controls from raw images. However, these approaches tend to be sensitive to mistakes by the teacher and do not scale well to other environments or vehicles. To address these limitations, we propose Observational Imitation Learning (OIL), a novel imitation learning variant that supports online training and automatic selection of optimal behavior by observing multiple imperfect teachers. We apply our proposed methodology to the challenging problems of autonomous driving and UAV racing. For both tasks, we utilize the Sim4CV simulator, which enables the generation of large amounts of synthetic training data and also allows for online learning and evaluation. We train a perception network to predict waypoints from raw image data and use OIL to train another network to predict controls from these waypoints. Extensive experiments demonstrate that our trained network outperforms its teachers, conventional imitation learning (IL) and reinforcement learning (RL) baselines, and even humans in simulation.
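
The two-stage design described in the abstract (a perception network that predicts waypoints, followed by a control network that maps waypoints to controls) can be illustrated with a minimal Python sketch. Everything below is an assumption made for illustration: the waypoint and control formats, and the `toy_perception` and `toy_controller` stand-ins, are hypothetical and are not the networks from the paper. The sketch only shows how the two stages compose, so that the control stage can be trained separately from perception.

```python
from typing import Callable, List, Sequence, Tuple

Image = Sequence[Sequence[float]]   # toy grayscale image: rows of pixel intensities
Waypoint = Tuple[float, float]      # (forward, lateral) offset; format is assumed
Controls = Tuple[float, float]      # (throttle, steering); format is assumed


def make_pipeline(
    perception: Callable[[Image], List[Waypoint]],
    controller: Callable[[List[Waypoint]], Controls],
) -> Callable[[Image], Controls]:
    """Compose the two stages: image -> waypoints -> controls."""
    def policy(image: Image) -> Controls:
        return controller(perception(image))
    return policy


def toy_perception(image: Image) -> List[Waypoint]:
    """Hypothetical stand-in for the perception network.

    Emits one waypoint per image row, placed at the brightest column.
    """
    width = len(image[0])
    centre = (width - 1) / 2
    waypoints = []
    for i, row in enumerate(image):
        brightest = max(range(width), key=lambda c: row[c])
        waypoints.append((float(i + 1), brightest - centre))
    return waypoints


def toy_controller(waypoints: List[Waypoint]) -> Controls:
    """Hypothetical stand-in for the control network.

    Steers proportionally to the mean lateral offset, clipped to [-1, 1].
    """
    mean_lateral = sum(w[1] for w in waypoints) / len(waypoints)
    steering = max(-1.0, min(1.0, 0.5 * mean_lateral))
    return (1.0, steering)


policy = make_pipeline(toy_perception, toy_controller)
```

Because the controller only ever sees waypoints, either stage could in principle be swapped out without retraining the other; that separation is the point of the sketch, not the toy heuristics themselves.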

Contributions

(1) We propose Observational Imitation Learning (OIL) as a new approach for training a stationary deterministic policy that overcomes shortcomings of conventional imitation learning by incorporating reinforcement learning ideas. It learns from an ensemble of imperfect teachers, but only updates the policy with the best maneuvers of each teacher, eventually outperforming all of them.

(2) We introduce a flexible network architecture that adapts well to different control scenarios and complex navigation tasks (e.g. autonomous driving and UAV racing) using OIL in a self-supervised manner without any human training demonstrations.

(3) To the best of our knowledge, this paper is the first to apply imitation learning with multiple teachers while remaining robust to teachers that exhibit bad behavior.
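
The idea in contribution (1), updating the policy only with the best maneuvers among several imperfect teachers, can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the 1-D toy dynamics, the reward (negative distance from the track centre), the fixed horizon, and the names `rollout` and `select_demonstrations` are all hypothetical. The sketch rolls out the learner and every teacher from the same state and keeps the best teacher's state-action pairs only if that teacher outperforms the learner.

```python
from typing import Callable, List, Tuple

Policy = Callable[[float], float]   # maps a 1-D state to a 1-D action (toy setting)
Pair = Tuple[float, float]          # (state, action) training example


def rollout(policy: Policy, state: float, horizon: int) -> Tuple[float, List[Pair]]:
    """Roll a policy forward in a toy 1-D world.

    Returns the cumulative reward and the visited (state, action) pairs.
    Dynamics and reward are assumptions made for this sketch.
    """
    total = 0.0
    pairs: List[Pair] = []
    for _ in range(horizon):
        action = policy(state)
        pairs.append((state, action))
        state = state + action    # toy dynamics: the action shifts the state
        total += -abs(state)      # toy reward: stay close to the centre at 0
    return total, pairs


def select_demonstrations(
    learner: Policy, teachers: List[Policy], state: float, horizon: int = 5
) -> List[Pair]:
    """Return the best teacher's pairs if it beats the learner, else nothing."""
    learner_return, _ = rollout(learner, state, horizon)
    best_return, best_pairs = max(
        (rollout(teacher, state, horizon) for teacher in teachers),
        key=lambda result: result[0],
    )
    if best_return > learner_return:
        return best_pairs         # would feed a supervised update of the learner
    return []                     # learner already matches or beats every teacher


# Two imperfect teachers: one overshoots and oscillates, one converges slowly.
teachers = [lambda s: -2.0 * s, lambda s: -0.5 * s]
demos = select_demonstrations(lambda s: 0.0, teachers, state=1.0)
```

In a full training loop, the returned pairs would be used as supervised targets for the learner, and the selection would be repeated online as the learner improves. Filtering out teachers that do worse than the learner is what keeps bad teacher behavior out of the training data in this sketch.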

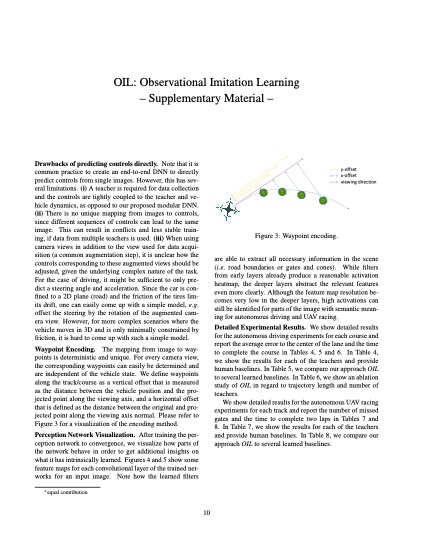

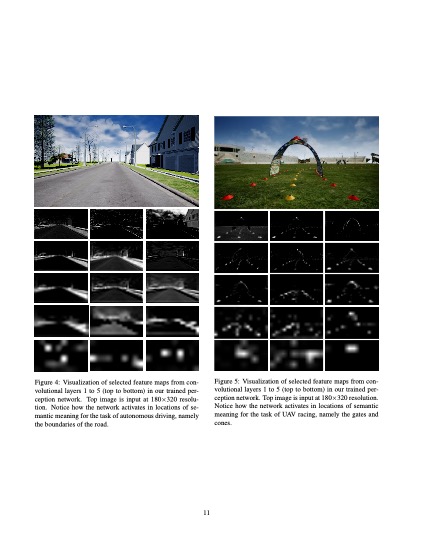

Supplementary Material

Vehicle Navigation

| Ours (OIL) | Reference (Behavioral Cloning) |
| Reference (DAgger) | Reference (DDPG) |

Drone Navigation

| Ours (OIL) | Reference (Behavioral Cloning) |
| Reference (DAgger) | Reference (DDPG) |