4D-Vision Workshop (ECCV’20)

Visual scene understanding is crucial for many practical applications in the real world. A lot of work has focused on 3D scene analysis. Considering the scene over time as well, i.e. 4D visual understanding, is key to a holistic understanding of the surrounding world. 3D scene analysis, 3D reconstruction, SLAM and related areas have primarily treated scenes as static, without dynamic objects. At the same time, video analysis has produced highly advanced methods for understanding visual information over time. Recent research, e.g. in perception for autonomous driving or in embodied vision, has started to consider both aspects of scene analysis. By drawing on insights from scene understanding, video analysis, and 3D modeling and sensing of both dynamic and static scenes, we hope to shed more light on 4D vision for scene understanding.

Autoencoders & VAEs (ComputeFest)

Convolutional autoencoders have an extensive and successful track record in applications such as representation learning, dimensionality reduction and learning data transformations. Recently, autoencoding has received even more attention with the rise of generative applications, such as variational autoencoders (VAEs), that build on this versatile concept. In this workshop, we will first introduce the concept and inner workings of autoencoders. We will then hold a hands-on session in which attendees implement and train autoencoders on their own.
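To make the encode, compress, decode idea concrete, here is a minimal sketch in plain Python (not the workshop's own material): a deliberately tiny, linear, non-convolutional autoencoder with a one-value bottleneck, trained by full-batch gradient descent on the mean squared reconstruction error. The toy data, the fixed initial weights and the names `train_autoencoder` and `reconstruction_mse` are illustrative assumptions. Because every 2-D point lies on a single line, one code value per point is enough, so the reconstruction error can be driven close to zero.

```python
def encode(w_enc, x):
    """Encoder: project a 2-D point onto a single code value (the bottleneck)."""
    return w_enc[0] * x[0] + w_enc[1] * x[1]


def decode(w_dec, h):
    """Decoder: map the 1-D code back to a 2-D reconstruction."""
    return [w_dec[0] * h, w_dec[1] * h]


def reconstruction_mse(w_enc, w_dec, data):
    """Mean (over points) of the squared reconstruction error."""
    total = 0.0
    for x in data:
        x_hat = decode(w_dec, encode(w_enc, x))
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return total / len(data)


def train_autoencoder(data, epochs=1000, lr=0.05):
    """Full-batch gradient descent on reconstruction_mse (illustrative sketch)."""
    w_enc = [0.1, 0.2]   # small, fixed initial weights for reproducibility
    w_dec = [0.3, -0.1]
    n = len(data)
    for _ in range(epochs):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            h = encode(w_enc, x)
            # Residual between reconstruction and input.
            r = [xh - xi for xh, xi in zip(decode(w_dec, h), x)]
            # Derivative of this point's squared error w.r.t. the code h.
            dh = 2.0 * (r[0] * w_dec[0] + r[1] * w_dec[1])
            for j in range(2):
                g_dec[j] += 2.0 * r[j] * h / n
                g_enc[j] += dh * x[j] / n
        w_enc = [w - lr * g for w, g in zip(w_enc, g_enc)]
        w_dec = [w - lr * g for w, g in zip(w_dec, g_dec)]
    return w_enc, w_dec


# Hypothetical toy data: 2-D points on the line through (0.6, 0.8).
DATA = [(0.6 * t, 0.8 * t) for t in (-2.0, -1.0, -0.5, 0.5, 1.0, 2.0)]
```

Convolutional autoencoders follow the same pattern, with convolutional encoder and decoder layers in place of the two weight vectors and automatic differentiation in place of the hand-written gradients.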

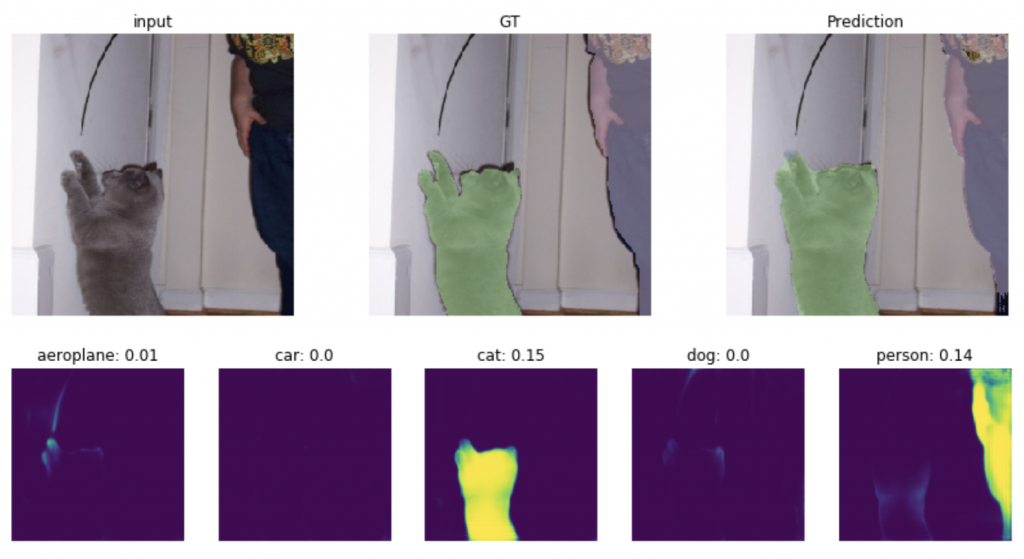

Transfer Learning (ComputeFest)

Transfer learning is a machine learning method in which a model developed for one task is reused as the starting point for a model on a second task. It is a popular approach in deep learning, where pre-trained models serve as the starting point for computer vision and natural language processing tasks. This matters because developing neural network models for these problems requires vast computational and time resources, and because pre-trained models can provide large jumps in performance on related problems. In this part of the program, we will examine various pre-trained models and transfer learning techniques.
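The reuse of a pre-trained model can be sketched in plain Python under simplifying assumptions: a hypothetical frozen feature extractor, `PRETRAINED_W`, stands in for a network trained on a large source task, and only a new logistic-regression head is trained on a small target task (here the toy rule `x0 + x1 > 0`). All names and data are illustrative, and the sketch shows the feature-extraction flavor of transfer learning rather than fine-tuning of the whole network.

```python
import math

# Hypothetical "pre-trained" base layer. In practice these weights would come
# from a network trained on a large source dataset; here they are hard-coded.
PRETRAINED_W = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]


def extract_features(x, weights=PRETRAINED_W):
    """Frozen base: one ReLU layer whose weights are never updated."""
    return [max(0.0, row[0] * x[0] + row[1] * x[1]) for row in weights]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs=500, lr=0.5):
    """Train only a new logistic-regression head on the frozen features."""
    feats = [extract_features(x) for x in samples]
    w = [0.0] * len(feats[0])
    b = 0.0
    n = len(feats)
    for _ in range(epochs):
        gw = [0.0] * len(w)
        gb = 0.0
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            for i, fi in enumerate(f):
                gw[i] += err * fi / n
            gb += err / n
        w = [wi - lr * gi for wi, gi in zip(w, gw)]
        b -= lr * gb
    return w, b


def predict(w, b, x):
    score = sum(wi * fi for wi, fi in zip(w, extract_features(x))) + b
    return 1 if score > 0 else 0


# Hypothetical target task: label 1 if x0 + x1 > 0.
TARGET_X = [(1.0, 1.0), (2.0, 0.5), (0.5, 2.0), (1.5, -0.5),
            (-1.0, -1.0), (-2.0, -0.5), (-0.5, -2.0), (-1.5, 0.5)]
TARGET_Y = [1, 1, 1, 1, 0, 0, 0, 0]
```

In Keras, the same split is typically expressed by loading a pre-trained model from `tf.keras.applications`, setting its `trainable` attribute to `False`, and stacking a new classification layer on top.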

Bayesian GANs (Lab)

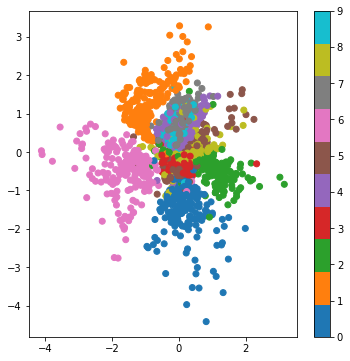

The paper Bayesian GAN by Y. Saatchi and A.G. Wilson introduces a new formulation of GANs that applies Bayesian techniques and outperforms some of the current state-of-the-art approaches. It is a true generalization, in that the original GAN formulation can be recovered for a specific choice of parameters, and the authors also show that this formulation allows sampling from a family of generators and discriminators, which avoids mode collapse. The posteriors over the generator and discriminator parameters are defined mathematically by marginalizing over the noise inputs. The paper presents an algorithm that samples from these posterior distributions using stochastic gradient Hamiltonian Monte Carlo (SGHMC), with simple Monte Carlo (MC) sampling of the noise inputs for the marginalization. We will first explain the intuition behind the paper, describe its most important mathematical underpinnings and apply the algorithm to a new, simple problem. Finally, we extend the model to a new, unreleased dataset and show how it performs compared with other state-of-the-art methods.
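For intuition about the sampler, the following is a minimal 1-D SGHMC sketch in plain Python, assuming the update rule of the original SGHMC paper (Chen, Fox and Guestrin, 2014) with the gradient-noise estimate set to zero. It samples a standard normal target rather than GAN weights; the step size, friction and the helper `noisy_grad_standard_normal` are illustrative assumptions, and the Bayesian GAN's Monte Carlo marginalization over the noise inputs is not shown.

```python
import math
import random


def sghmc(grad_u, theta0, n_steps, lr=0.01, friction=0.1, seed=0):
    """Stochastic gradient Hamiltonian Monte Carlo for a scalar parameter.

    Update (gradient-noise estimate assumed to be 0):
        theta <- theta + v
        v     <- v - lr * grad_U(theta) - friction * v + N(0, 2 * friction * lr)
    """
    rng = random.Random(seed)
    theta, v = theta0, 0.0
    noise_std = math.sqrt(2.0 * friction * lr)  # std of the injected noise
    samples = []
    for _ in range(n_steps):
        theta += v
        # The right-hand side uses the old v, as in the update rule above.
        v += -lr * grad_u(theta, rng) - friction * v + rng.gauss(0.0, noise_std)
        samples.append(theta)
    return samples


def noisy_grad_standard_normal(theta, rng):
    """Noisy gradient of U(theta) = theta**2 / 2, i.e. a N(0, 1) target.

    The small Gaussian perturbation is a hypothetical stand-in for minibatch
    noise in the gradient of a negative log-posterior.
    """
    return theta + rng.gauss(0.0, 0.1)
```

With a small step size, the draws left after discarding a burn-in period should have mean and variance close to 0 and 1. In the Bayesian GAN, roughly speaking, the same kind of update is applied to the weights of each sampled generator and discriminator, using minibatch gradients of their log-posteriors.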

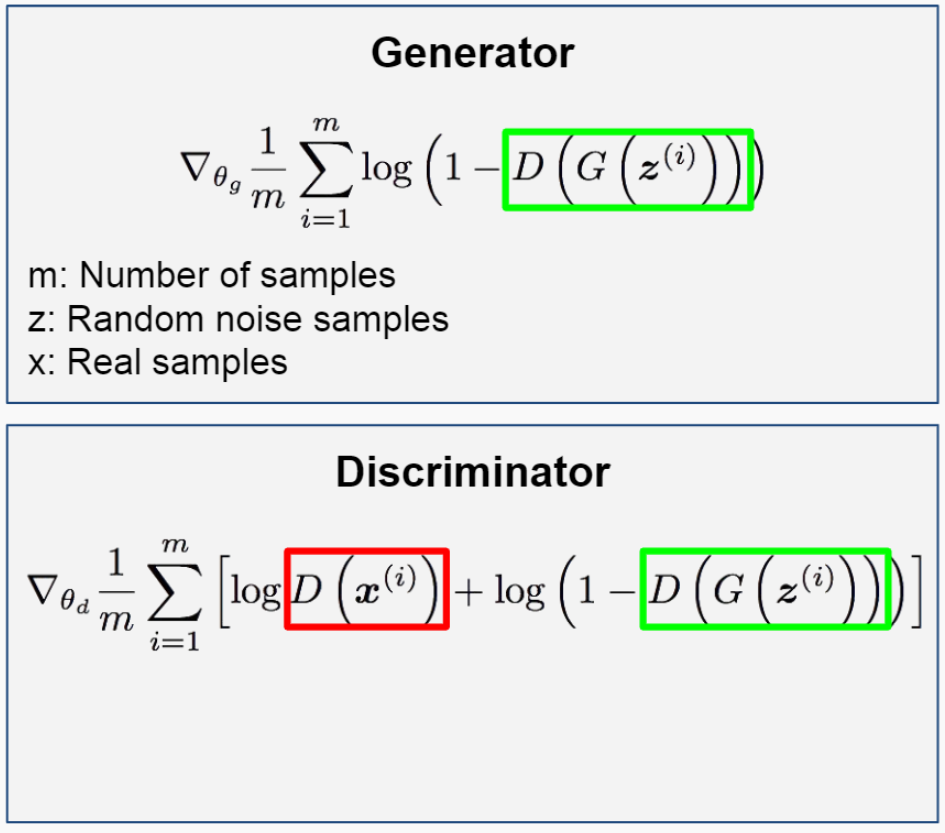

Generative Adversarial Networks (Lab)

We describe a minimal implementation of Generative Adversarial Networks (GANs) in Keras and train a simple GAN for face synthesis on the CelebA dataset. The goal is to deepen understanding of the underlying concepts and to provide an easy-to-follow, hands-on example.
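The lab's implementation is in Keras; to isolate the two objectives that a GAN training loop alternates between, here is a framework-free sketch in plain Python. It assumes the common non-saturating generator loss, and the inputs `d_real` and `d_fake` are hypothetical discriminator outputs (probabilities of "real") for a batch of real CelebA faces and a batch of generated faces.

```python
import math


def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """Push D(x) towards 1 on real images and D(G(z)) towards 0 on fakes."""
    real = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real + fake


def generator_loss(d_fake):
    """Non-saturating loss: train G so that D labels its samples as real."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)
```

When the discriminator outputs 0.5 everywhere, the discriminator loss is 2 ln 2 ≈ 1.386 and the generator loss is ln 2 ≈ 0.693. In a typical Keras setup, both objectives correspond to `binary_crossentropy`: the discriminator is trained on real images labelled 1 and generated images labelled 0, and the stacked generator-plus-discriminator model is trained on generated images labelled 1 while the discriminator's weights are frozen.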